Picture taken by Tao Pang

I am a third-year PhD student at MIT, where I am advised by Prof. Russ Tedrake in the Robot Locomotion Group.

My research focuses on scaling realistic simulation to generate training data and evaluation benchmarks for robotic manipulation foundation models. I enjoy combining model-based and learning-based methods to tackle this challenge.

Before joining MIT, I completed my MEng at Imperial College London and spent nine months at Ocado Technology, where I worked on parallel-jaw pick-and-place systems at scale.

Outside of academia, I am passionate about rock climbing, backpacking, Latin social dancing, and specialty coffee.

News

- Oct 2025 Gave an invited talk at the IROS Multimodal Robot Learning in Physical Worlds workshop (a recording is available online)

- Sep 2025 Gave an invited talk and participated in a panel discussion at the CoRL Learning to Simulate Robot Worlds workshop

- Aug 2025 Gave an invited talk at TRI on "Achieving Simulation Diversity for Pretraining and Evaluation of Robot Foundation Models"

- Jun 2025 Featured in the MIT CSAIL Student Research Spotlight

- May 2025 Received the MIT Schwarzman College of Computing Graduate Teaching Award (presented annually to one faculty member or teaching assistant) for TAing MIT's Robotic Manipulation class

- May 2024 Joined Toyota Research Institute's Large Behavior Models team in Los Altos for an internship

- Sep 2023 Joined the Robot Locomotion Group for my PhD

- Sep 2022 Received the Head of Department's Prize from Imperial College London as the top-performing student in my course

Research

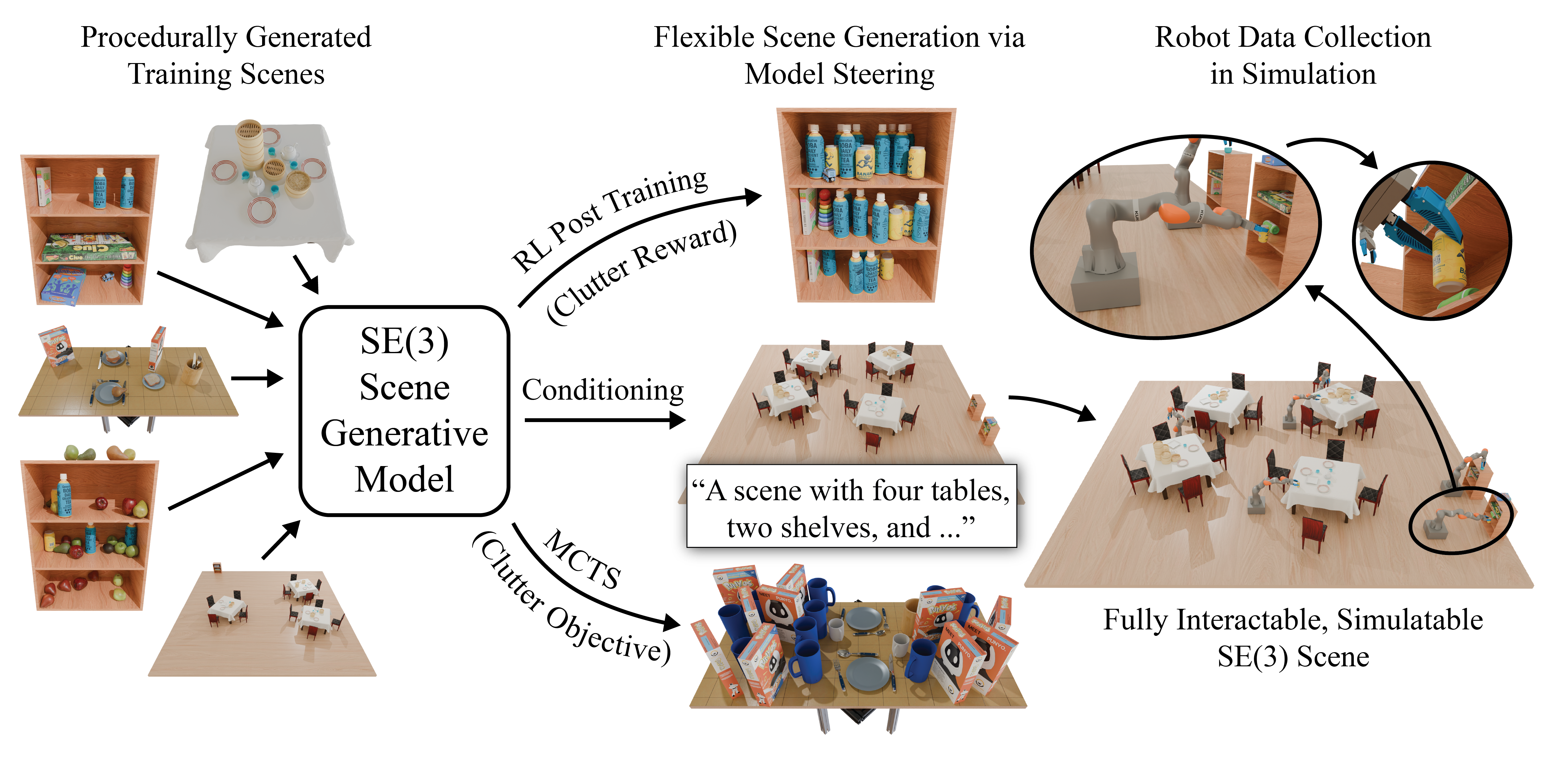

Steerable Scene Generation with Post Training and Inference-Time Search

Nicholas Pfaff, Hongkai Dai, Sergey Zakharov, Shun Iwase, Russ Tedrake

Conference on Robot Learning (CoRL 2025)

Best Poster Award at the RoboGen Workshop, IROS 2025

Website • arXiv • GitHub • News Coverage

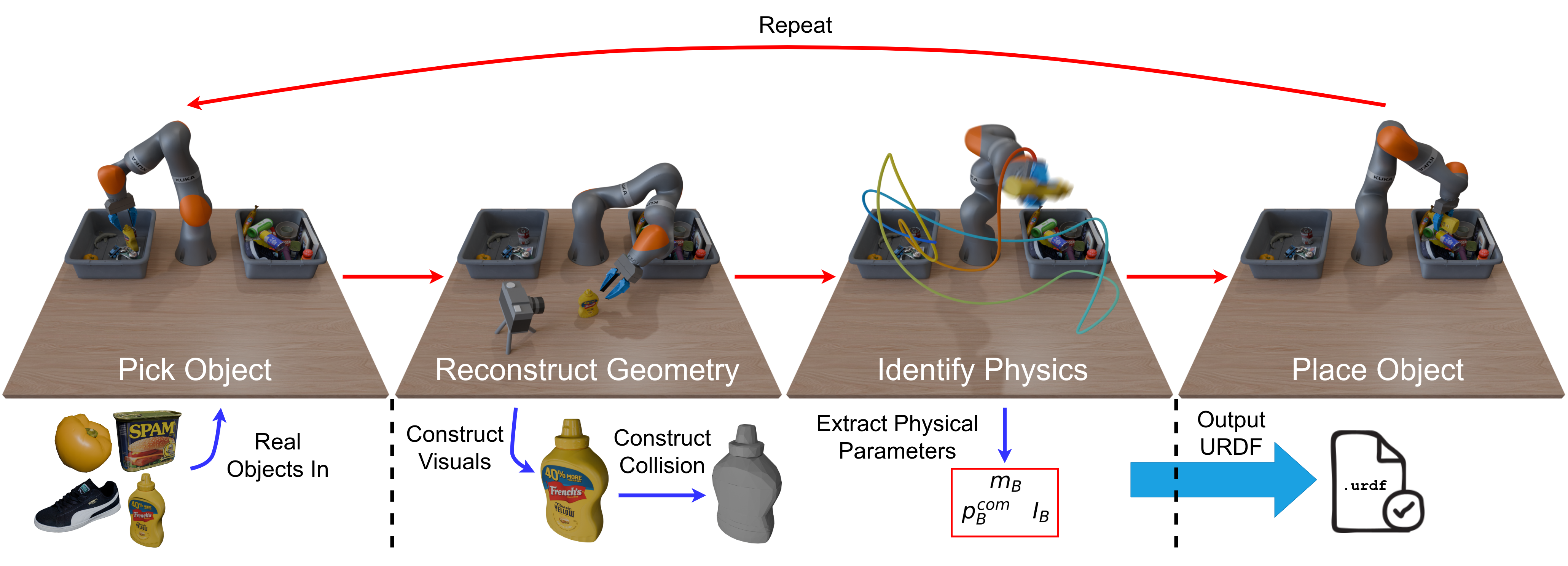

Scalable Real2Sim: Physics-Aware Asset Generation Via Robotic Pick-and-Place Setups

Nicholas Pfaff, Evelyn Fu, Jeremy Binagia, Phillip Isola, Russ Tedrake

International Conference on Intelligent Robots and Systems (IROS 2025)

New England Manipulation Symposium (NEMS 2025), Oral

Website • arXiv • GitHub

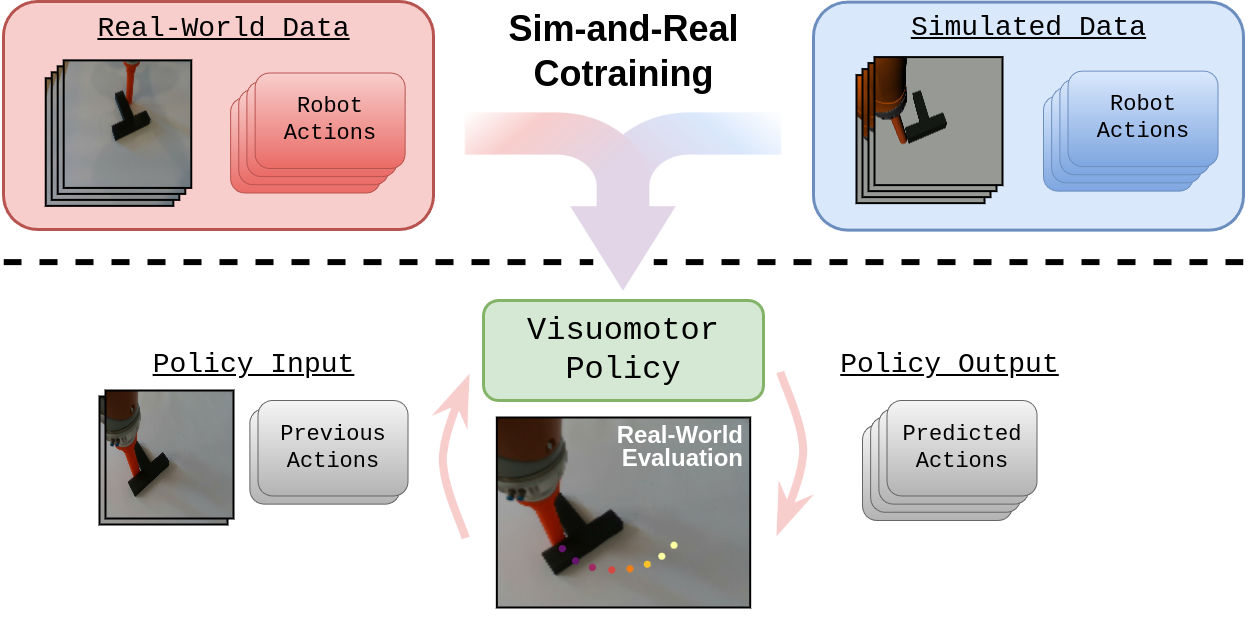

Empirical Analysis of Sim-and-Real Cotraining of Diffusion Policies for Planar Pushing from Pixels

Adam Wei, Abhinav Agarwal, Boyuan Chen, Rohan Bosworth, Nicholas Pfaff, Russ Tedrake

International Conference on Intelligent Robots and Systems (IROS 2025)

Website • arXiv

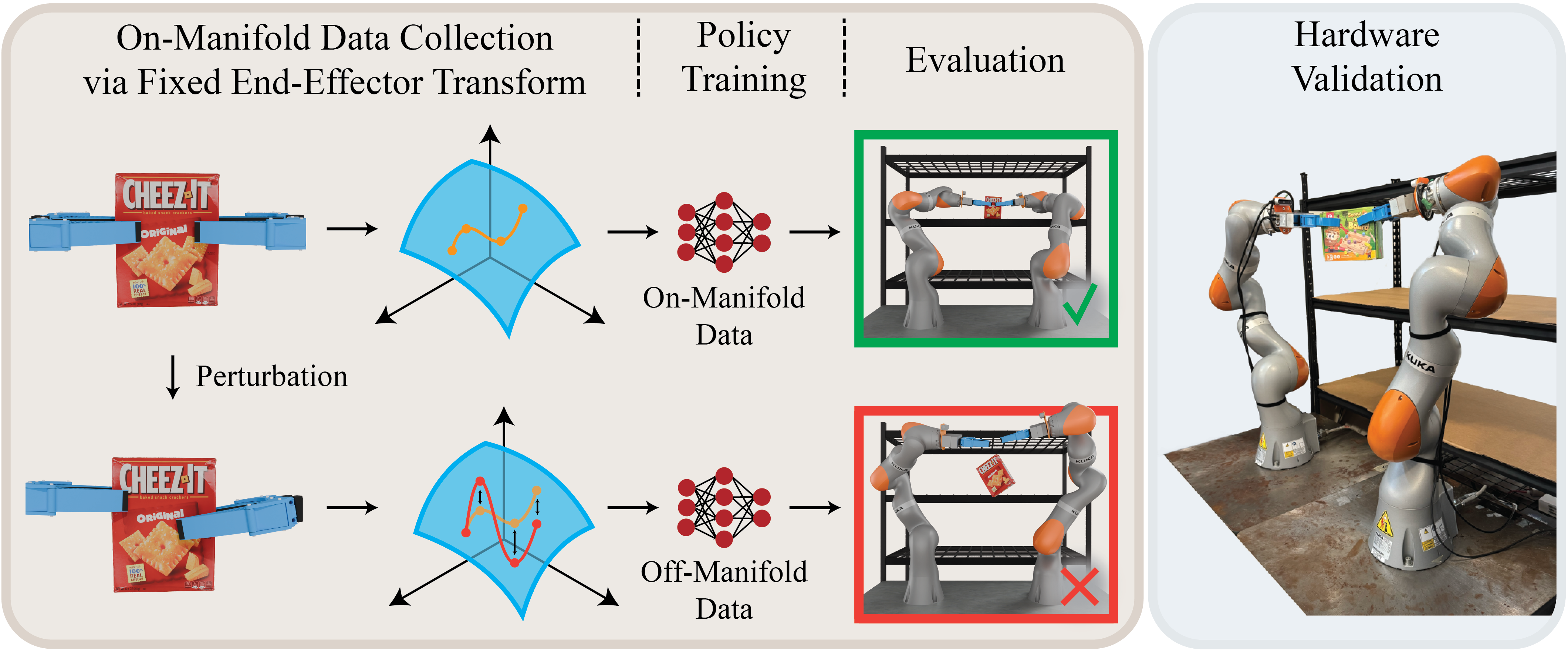

How Well do Diffusion Policies Learn Kinematic Constraint Manifolds?

Lexi Foland, Thomas Cohn, Adam Wei, Nicholas Pfaff, Boyuan Chen, Russ Tedrake

Under Review

Best Poster Award at the RoDGE Workshop, IROS 2025

Website • arXiv

Outside the Lab

I enjoy staying active in my free time. These days, that means bouldering several times a week. In the past, I competed in long-distance running, rowing, and Latin social dancing. During holidays, I often trek, backpack, or cycle in different parts of the world.